In my previous blog, I talked about the fundamentals of VMware SD-WAN technology by VeloCloud, focusing on its architecture and use cases.

In this blog, I will discuss the design I used to connect NSX-backed workloads across different sites/branches and clouds using VMware VeloCloud SD-WAN technology.

My setup consists of the following:

1. An NSX-V lab living on Site A. It is backed by a vCenter plus management and compute ESXi clusters. Workloads are virtual machines.

2. An NSX-T lab, backed by a management and compute ESXi cluster in addition to a KVM cluster. Workloads are VMs along with Kubernetes (K8s) containers.

3. A VMware Cloud on AWS SDDC instance with vCenter/ESXi/VMs.

Because SD-WAN is agnostic of the technology running in the data centers, you can add any cloud, data center or branch to the list above, such as native AWS EC2 instances, Azure, GCP, Alibaba Cloud, or simply any private/public cloud workloads.

Also note that NSX Data Center is not a prerequisite for connecting sites/branches using SD-WAN by VeloCloud.

The Architectural Design of the end Solution:

The design above shows a small portion of the full picture, in which I can connect “n” number of sites/branches/clouds using SD-WAN technology.

I started by connecting my site 1 in San Jose, which happens to be backed by NSX-V, with my other DC located in San Francisco, backed by NSX-T.

Traffic egressing to the Internet (non-SD-WAN traffic, in green) goes via the NSX ESG at the NSX-V site and via the Tier-0 at the NSX-T site.

Branch-to-branch traffic (in purple) will ingress/egress via the VeloCloud Edge (VCE) on each site.

NSX-V Site:

In the San Jose site, I peered the NSX-V ESG with the VCE using eBGP. I also already had an iBGP neighborship between the NSX DLR and the ESG. The transit boundary in blue that you see in the image below is established by deploying an NSX logical switch attached to the NSX-V ESG, the NSX-V DLR and the VCE.

I redistributed the routes learned via iBGP on the ESG to the VCE using eBGP.

Now VCE_SanJose knows about the subnets/workloads that reside south of the DLR.

I filtered out the default route that the ESG learned from its upstream so that it is not redistributed to VCE_SanJose, as I don't want to advertise my default route to other branches/sites/clouds.
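To make the filtering step concrete, here is a minimal Python sketch of the logic only. It is not the ESG configuration itself, and the function name and the sample prefixes are assumptions made up for illustration. It drops any default route from a list of learned prefixes before they are handed to the eBGP peer.

```python
import ipaddress


def filter_default_route(prefixes):
    """Return the prefixes with any default route (prefix length 0) removed."""
    kept = []
    for prefix in prefixes:
        network = ipaddress.ip_network(prefix)
        # 0.0.0.0/0 (or ::/0) is the default route; do not pass it on.
        if network.prefixlen == 0:
            continue
        kept.append(str(network))
    return kept


# Hypothetical routes the ESG might hold: a default from upstream plus
# two workload subnets learned from the DLR via iBGP.
learned_on_esg = ["0.0.0.0/0", "172.16.10.0/24", "172.16.20.0/24"]
advertised_to_vce = filter_default_route(learned_on_esg)
print(advertised_to_vce)
```

With the default route removed, only the workload subnets would be announced to VCE_SanJose, so other sites never learn San Jose as an Internet exit.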

Low level design on the NSX-V Site:

Based on the above,

Internet/non-SD-WAN traffic path will be as follows:

VM1–>DLR–>ESG–>Internet/VLAN

SD-WAN traffic path:

VM1–>DLR–>VCE–>Internet. (Note that the VCE could have multiple ISP links or MPLS links that leverage Dynamic Multipath Optimization, known as DMPO.)

The VCE will build tunnels to the Gateways (VCGs) hosted by VMware VeloCloud and to the Orchestrator (VCO). The VeloCloud Gateways act as the VCEs' distributed control plane, so each VCE learns about all other branches' routes via the updates those VCGs send over (refer to Image 1 to help you understand the path).
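The split between the two paths comes down to an ordinary longest-prefix match: specific SD-WAN prefixes point at the VCE, and everything else follows the default route to the ESG. The sketch below illustrates that decision in Python. The route table contents and the 10.20.0.0/16 remote prefix are hypothetical values chosen for the example, not taken from my lab.

```python
import ipaddress


def next_hop(routes, destination):
    """Pick the next hop whose prefix is the longest match for destination."""
    dest = ipaddress.ip_address(destination)
    best_network, best_hop = None, None
    for prefix, hop in routes.items():
        network = ipaddress.ip_network(prefix)
        if dest in network and (
            best_network is None or network.prefixlen > best_network.prefixlen
        ):
            best_network, best_hop = network, hop
    return best_hop


# Hypothetical view of the DLR routing table in San Jose.
dlr_routes = {
    "0.0.0.0/0": "ESG",             # Internet / non-SD-WAN traffic
    "10.20.0.0/16": "VCE_SanJose",  # assumed remote-site prefix learned over SD-WAN
}

print(next_hop(dlr_routes, "8.8.8.8"))     # follows the default route
print(next_hop(dlr_routes, "10.20.5.10"))  # follows the more specific SD-WAN route
```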

Now that we are done configuring the San Jose site, let's go and configure the San Francisco NSX-T data center.

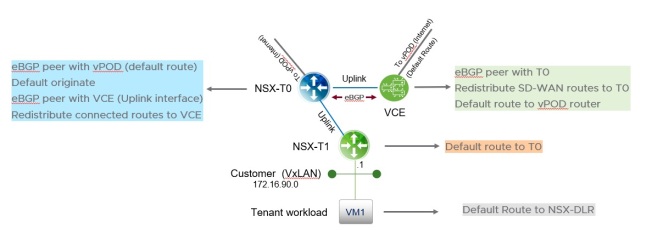

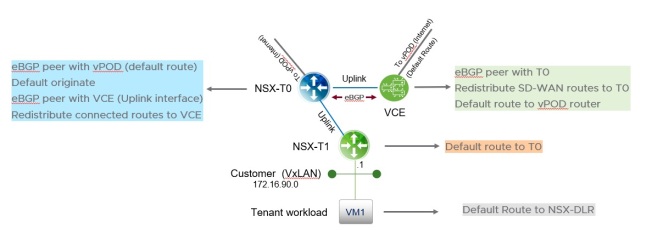

NSX-T Site:

A new Tier-0 uplink will be connected to an NSX-T Geneve logical switch. This transit logical switch will also be connected to one of the VCE's interfaces as a downlink.

On the NSX-T Tier-0 and the VCE, we will build an eBGP neighborship via the transit logical switch we created. The VCE will hence know about the routes being advertised from the Tier-0.

Note that in NSX-T, Tier-1 routers automatically plumb all their routes towards the Tier-0.

Now that the VCE knows about the San Francisco routes, it will advertise them to the VCG, which is again hosted somewhere on the Internet by VMware VeloCloud.

Low level design on the NSX-T Site:

Internet/Non-SD-WAN traffic path will be as follows:

VM1–>Tier-1–>Tier-0–>Internet/VLAN

SD-WAN traffic path:

VM1–>Tier-1–>Tier-0–>VCE–>Internet.

Note that the VCE could have multiple ISP links or MPLS links that leverage Dynamic Multipath Optimization, known as DMPO.

The VCE will build tunnels to the Gateways (VCGs) hosted by VMware VeloCloud and to the Orchestrator (VCO). The Gateways act as the VCEs' control plane, so each VCE learns about all other branches' routes via the VCG.

Now San Jose and San Francisco workloads know how to reach each other via SD-WAN.
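As a rough mental model of how the two sites learn about each other, the sketch below treats the VCG as a simple route exchange point: each VCE advertises its local prefixes, and each VCE receives the prefixes of every other edge. This is a toy illustration only. The `Gateway` class, its methods and the prefixes are hypothetical and do not represent the VeloCloud control-plane protocol or any VMware API.

```python
class Gateway:
    """Toy stand-in for a VCG that relays routes between edges."""

    def __init__(self):
        # edge name -> set of prefixes that edge advertised
        self.advertised = {}

    def advertise(self, edge, prefixes):
        """Record the prefixes an edge announces to the gateway."""
        self.advertised[edge] = set(prefixes)

    def routes_for(self, edge):
        """Return prefix -> originating edge for every *other* edge."""
        learned = {}
        for other_edge, prefixes in self.advertised.items():
            if other_edge == edge:
                continue
            for prefix in prefixes:
                learned[prefix] = other_edge
        return learned


vcg = Gateway()
vcg.advertise("VCE_SanJose", ["172.16.10.0/24"])       # assumed NSX-V subnet
vcg.advertise("VCE_SanFrancisco", ["10.20.0.0/16"])    # assumed NSX-T subnet

print(vcg.routes_for("VCE_SanJose"))
print(vcg.routes_for("VCE_SanFrancisco"))
```

Adding a third site in this model is just one more `advertise` call, which mirrors why adding branches does not require touching the existing sites.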

Summary

The magic of SD-WAN is that we can add “n” number of sites with or without NSX and connect them via L3 seamlessly. For instance, I can connect 50 branches to those 2 DCs by deploying a VCE on each branch.

We can also use the DMPO technology to improve the Quality of Service of the traffic destined to branches. Business policies can also be enforced using the VCE.

Published by Wissam Mahmassani

I am a Senior Solutions Engineer for VMware in the Cloud Service Provider Program. I have 10+ years of Networking and security experience.

My primary focus is on integrating NSX within the Cloud Service Providers. I work closely with various engineering teams and product managers within VMware to help provide feedback on usability, design and architecture. I use customer interactions and feedback to further help improve the VMware NSX product offering.


Hi, I am wondering if it would be possible in the NSX-T scenario to connect a VCE to a Tier-1 router instead of directly to the Tier-0? This would be for a multi-tenant scenario where each tenant might require their own VCE.


Hi, unfortunately, today an NSX-T Tier-1 cannot be attached to anything but a Tier-0. Auto-plumbing is done between the Tier-1(s) and their respective Tier-0.

You can, however, connect the VCE to a Tier-1 via a logical switch attached to the Tier-1. You will have to configure static routes on the Tier-1/VCE, as the Tier-1 does not support dynamic routing today.


Hi thanks for the reply! Yeah, my thought was something like this:

Public Internet —> Tier-0 —> Tier-1 —> Logical Switch —> Tenant1 VCE —> Logical Switch —> Tenant VMs.

With this scenario, could you use the VCE as the L3 gateway for the tenant VMs instead of the Tier-1 router?

Do you have any experience or resources for deploying VeloCloud with NSX-T and VMware Integrated OpenStack?

Thanks!


Yes, absolutely… I actually did that for a VMC (VMware Cloud on AWS) environment. Your workloads will have a default route pointing to the VCE, and the VCE will have a default route towards the T1. T1 routes are auto-plumbed to the T0 –> Internet.

I don't have experience with OpenStack. However, if you are a cloud service provider, I would suggest deploying a VCG (VeloCloud Gateway) on-prem. The VCG is multi-tenant, supporting a VRF/tenant VLAN, and you can deploy it north of your Tier-0. You could dedicate a T0 per tenant (up to 80 per NSX Manager domain) and perhaps get rid of the T1s, as the T0 has a DR component that is distributed.

Cheers,

Wissam


Hi, great reading your post.
